Using AWK and R to parse 25tb

Intro

Recently I was put in charge of setting up a workflow for dealing with a large amount of raw DNA sequencing (well, technically SNP chip) data for my lab. The goal was to be able to quickly get the data for a given genetic location (called a SNP) for use in modeling and so on. Using vanilla R and AWK, I was able to clean up and organize the data in a natural way, massively speeding up querying. It certainly wasn’t easy, and it took lots of iterations. This post is meant to help others avoid some of the same mistakes and to show what did eventually work.

The Data

The data was delivered to us by our university’s genetics processing center as 25 TB of TSVs. Before handing it off to me, my advisor split and gzipped these files into five batches, each composed of roughly 240 four-gigabyte files. Each row contained the data for a single SNP for a single person. There were ~2.5 million SNPs and ~60 thousand people. Along with the SNP value, there were multiple numeric columns for things like the intensity of the reading, the frequency of different alleles, etc. All told, there were around 30 columns with frustratingly unique values.
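To get a feel for the scale, here is a back-of-envelope calculation using only the approximate figures quoted above. It is a rough sketch, not a measurement: it assumes decimal units (1 TB = 10^12 bytes), assumes every person has a row for every SNP, and assumes the four-gigabyte figure refers to the size of each gzipped file.

```python
# Back-of-envelope sizing from the approximate figures in the text.
N_SNPS = 2_500_000           # ~2.5 million SNPs
N_PEOPLE = 60_000            # ~60 thousand people
RAW_BYTES = 25 * 10**12      # 25 TB of TSVs (assuming 1 TB = 10**12 bytes)

N_BATCHES = 5
FILES_PER_BATCH = 240        # "roughly 240" files per batch
GZ_FILE_BYTES = 4 * 10**9    # assumed: ~4 GB per file after gzipping

# One row per (SNP, person) pair, assuming no missing pairs.
total_rows = N_SNPS * N_PEOPLE
bytes_per_row = RAW_BYTES / total_rows

total_files = N_BATCHES * FILES_PER_BATCH
gz_total_bytes = total_files * GZ_FILE_BYTES

# Rows that a single-SNP query needs to return.
rows_per_snp = N_PEOPLE

print(f"total rows:        {total_rows:,}")
print(f"bytes per row:     {bytes_per_row:.0f}")
print(f"total files:       {total_files:,}")
print(f"gzipped total:     {gz_total_bytes / 10**12:.1f} TB")
print(f"rows per SNP:      {rows_per_snp:,}")
```

Under these assumptions the dataset holds about 150 billion rows at roughly 167 bytes each, spread over about 1,200 files, while any one SNP accounts for only about 60 thousand of those rows.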

The Goal

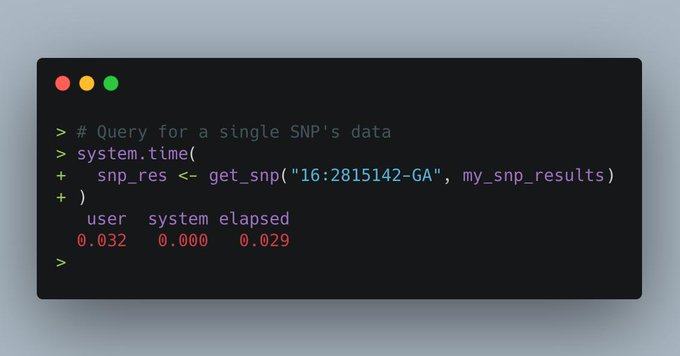

As with any data management project, the most important thing is to consider how the data will be used. In this case, what we will mostly be doing is fitting models and running workflows on a SNP-by-SNP basis, i.e. we will only need a single SNP’s data at a time. I needed to make it as easy, fast, and cheap as possible to extract all the records pertaining to any one of the 2.5 million SNPs.
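To make the access pattern concrete, here is a minimal sketch of the query in its most naive form: stream one gzipped TSV and keep only the rows for a single SNP. It is written in Python purely for illustration and is not the approach the post ends up with. The function name `rows_for_snp` and the `snp_col` parameter are hypothetical, since the excerpt does not describe the column layout.

```python
import csv
import gzip
from typing import Iterator, List


def rows_for_snp(path: str, snp_id: str, snp_col: int = 0) -> Iterator[List[str]]:
    """Yield every row of a gzipped TSV whose SNP column equals snp_id.

    snp_col is an assumed column position; the real layout is not given
    in the text.
    """
    with gzip.open(path, "rt", newline="") as handle:
        # QUOTE_NONE treats quote characters as ordinary data, which is
        # the safer default for plain tab-separated files.
        reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
        for row in reader:
            if len(row) > snp_col and row[snp_col] == snp_id:
                yield row
```

A scan like this has to decompress and read a whole file to answer one query. If a SNP's rows are spread across many files, that cost is paid for every file on every lookup, which is why organizing the data around the SNP matters.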
