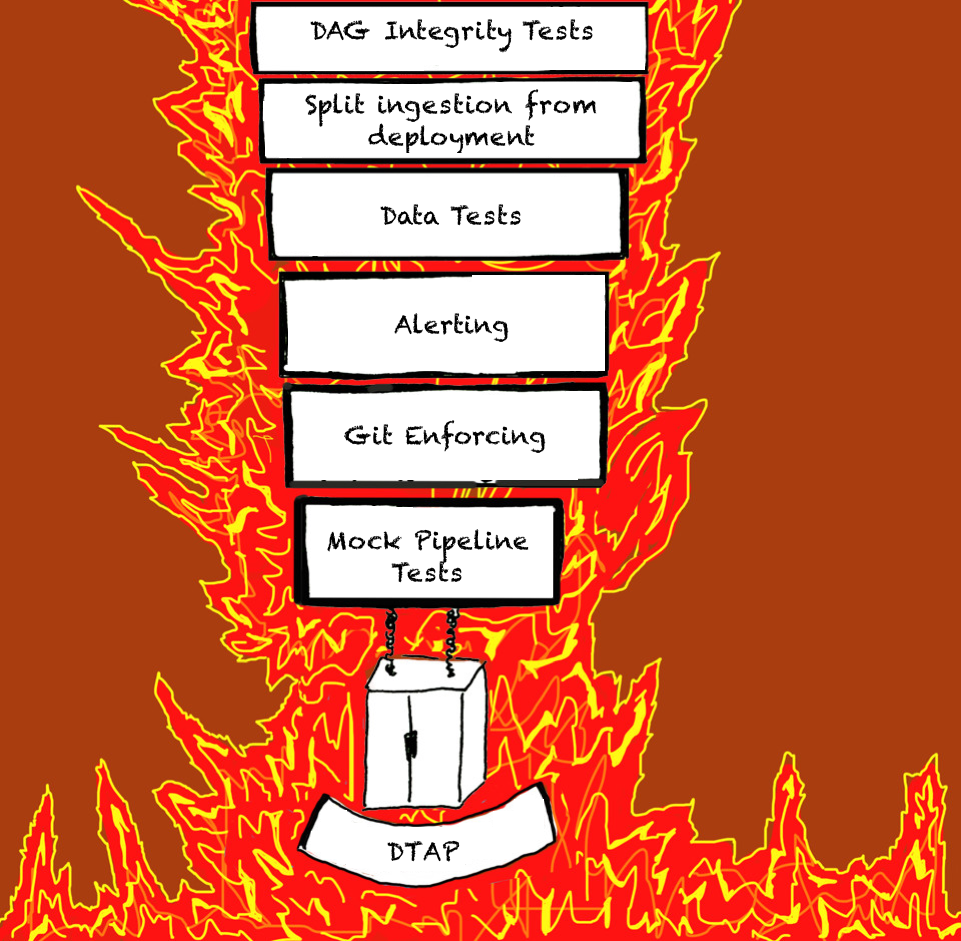

Data’s Inferno: 7 Circles of Data Testing Hell with Airflow

Real data

behaves in many unexpected ways that can break even the most

well-engineered data pipelines. To catch as much of this weird behaviour

as possible before users are affected, the ING Wholesale Banking

Advanced Analytics team has created 7 layers of data testing that they

use in their CI setup and Apache Airflow pipelines to stay in control of

their data. The 7 layers are:

- DAG Integrity Tests; have your CI (Continuous Integration) check if you DAG is an actual DAG

- Split your ingestion from your deployment; keep the logic you use to ingest data separate from the logic that deploys your application

- Data Tests; check if your logic is outputting what you’d expect

- Alerting; get slack alerts from your data pipelines when they blow up

- Git Enforcing; always make sure you’re running your latest verified code

- Mock Pipeline Tests; create fake data in your CI so you know exactly what to expect when testing your logic

- DTAP; split your data into four different environments, Development is really small, just to see if it runs, Test to take a representative sample of your data to do first sanity checks, Acceptance is a carbon copy of Production, allowing you to test performance and have a Product Owner do checks before releasing to Production

We

have ordered the 7 layers in order of complexity of implementing them,

where Circle 1 is relatively easy to implement, and Circle 7 is more

complex. Examples of 5 of these circles can be found at: https://github.com/danielvdende/data-testing-with-airflow.

We

cannot make all 7 public, 4 and 5 are missing, as that would allow

everyone to push to our git repository, and post to our Slack

channel :-).

Comments

Post a Comment